Performance Evaluation

Performance Evaluation#

Evaluation Methodology#

Model execution produces 1-km gridded SWE estimates across the Sierra Nevada Mountains, and we can use the Standardized Snow Water Equivalent Evaluation Tool (SSWEET) to perform a comprehensive model evaluation against NASA ASO and snow course surveys.

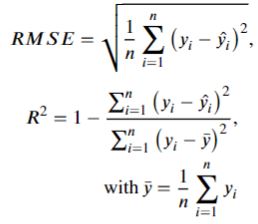

SSWEET uses standard model evaluation metrics to gauge model performance, i.e., Percent Bias (PBias), Coefficient of Determination (R2), Kling-Gupta Efficiency (KGE), and Root Mean Squared Error (RMSE), and includes several methods to investigate how temporal and spatial characteristics influence model skill, ultimately to help refine the model.

PBias is a metric communicating the average tendency of the simulated values to be larger or smaller than their observed ones. The optimal value of PBias is 0.0, with low-magnitude values indicating accurate model simulation.
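As a minimal sketch of the calculation, the snippet below computes PBias with NumPy. The function name `pbias`, the sample values, and the sign convention (positive when the model overestimates) are assumptions for illustration. Sign conventions differ between packages, so check which one a library uses before comparing values.

```python
import numpy as np

def pbias(observed, predicted):
    """Percent bias: 100 * sum(predicted - observed) / sum(observed).

    Assumed sign convention: positive means the model overestimates.
    """
    observed = np.asarray(observed, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return 100.0 * np.sum(predicted - observed) / np.sum(observed)

# Hypothetical SWE values in cm, for illustration only.
obs = [10.0, 20.0, 30.0, 40.0]
pred = [12.0, 22.0, 33.0, 43.0]
print(pbias(obs, pred))
```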

The coefficient of determination (R2) is a unitless measurement of the proportion of explained variance of the target variable by the model. A maximum R2 score of 1.0 indicates the predictor variables explain 100 percent of the variation in the target. A greater R2 and lower RMSE represent better model predictive performance.
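The following sketch shows one common way to compute R2, assuming the definition 1 - SS_res / SS_tot (residual sum of squares over total sum of squares). The function name and sample values are hypothetical. Some tools instead report the squared Pearson correlation, which can give a different number.

```python
import numpy as np

def r_squared(observed, predicted):
    """Coefficient of determination, assuming R2 = 1 - SS_res / SS_tot."""
    observed = np.asarray(observed, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    ss_res = np.sum((observed - predicted) ** 2)        # unexplained variation
    ss_tot = np.sum((observed - observed.mean()) ** 2)  # total variation
    return 1.0 - ss_res / ss_tot

# Hypothetical SWE values in cm, for illustration only.
obs = [10.0, 20.0, 30.0, 40.0]
pred = [12.0, 22.0, 33.0, 43.0]
print(r_squared(obs, pred))
```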

KGE is based on the Euclidean distance from the ideal point in the space defined by bias, variability (the ratio of standard deviations), and correlation, and equals one minus that distance. Similar to the coefficient of determination, values closer to 1 indicate greater model skill. Because the distance combines all three components, a poor score in any single component limits the KGE.
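A minimal sketch of the KGE calculation, assuming the original 2009 formulation: KGE = 1 - sqrt((r - 1)^2 + (alpha - 1)^2 + (beta - 1)^2), where r is the linear correlation, alpha is the ratio of standard deviations, and beta is the ratio of means. The function name and sample values are hypothetical, and the variant SSWEET uses may differ.

```python
import numpy as np

def kge(observed, predicted):
    """Kling-Gupta Efficiency, assuming the 2009 formulation."""
    observed = np.asarray(observed, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    r = np.corrcoef(observed, predicted)[0, 1]   # correlation component
    alpha = predicted.std() / observed.std()     # variability component
    beta = predicted.mean() / observed.mean()    # bias component
    distance = np.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2)
    return 1.0 - distance

# Hypothetical SWE values in cm, for illustration only.
obs = np.array([10.0, 20.0, 30.0, 40.0])
pred = np.array([12.0, 22.0, 33.0, 43.0])
print(kge(obs, pred))
```

A perfect prediction gives r = alpha = beta = 1 and therefore KGE = 1. Doubling every prediction keeps r at 1 but moves alpha and beta to 2, which illustrates how bias and variability errors lower the score even when correlation is perfect.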

RMSE is the quadratic mean of the differences between the observations and predictions, i.e., the residuals. RMSE aggregates the magnitudes of the residuals for all data points into a single measure of model accuracy. Note that RMSE is scale dependent, so values are comparable only between data in the same units.
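The sketch below computes RMSE and illustrates its scale dependence by expressing the same hypothetical data in centimeters and millimeters. The function name and values are assumptions for illustration.

```python
import numpy as np

def rmse(observed, predicted):
    """Root mean squared error: sqrt(mean((observed - predicted)^2))."""
    observed = np.asarray(observed, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((observed - predicted) ** 2)))

# Hypothetical SWE values in cm, for illustration only.
obs_cm = np.array([10.0, 20.0, 30.0, 40.0])
pred_cm = np.array([12.0, 22.0, 33.0, 43.0])

rmse_cm = rmse(obs_cm, pred_cm)
rmse_mm = rmse(obs_cm * 10, pred_cm * 10)  # same data expressed in mm
print(rmse_cm, rmse_mm)
```

The millimeter RMSE is ten times the centimeter RMSE even though the model skill is identical, which is why RMSE should only be compared across datasets that share units.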

Preliminary Model Evaluation#

Preliminary model evaluation uses the 25% held-out testing data, where the known previous SWE values from NASA ASO flights support the previous SWE feature during model inference. The following evaluation uses SSWEET to analyze model performance and statistically benchmark improvements compared to the current NSM.

Model Training/Testing influences and Bias on Model Performance.#

The model training/testing partitioning methodology has a strong influence on model performance and should reflect the goal of model evaluation. The objective of this modeling effort was to examine the spatial extrapolation capacity of the model from selected monitoring stations to the overall region, which is best suited to a 75/25% training/testing split.

While it is critical to address the strong serial correlation in SWE accumulation and melt throughout the season, the high correlation between weeks has the potential to inflate model skill when using a 75/25% training/testing split because the previous SWE feature is known. Assessing the operational capacity of the model differs from assessing its ability to extrapolate regional SWE from in-situ monitoring stations, which is why water year (WY) 2019 was held out of the training data to serve as the final model validation.
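The partitioning described above can be sketched as follows. This is an illustration under stated assumptions, not the project's actual pipeline: the column name `Date`, the function names, the synthetic weekly data, and the use of a random 75/25 split are all hypothetical. The water year convention assumed is October 1 through September 30, labeled by the calendar year in which it ends.

```python
import pandas as pd

def water_year(dates):
    """Water year label, assuming Oct 1 - Sep 30 named by its ending year."""
    dates = pd.to_datetime(dates)
    return dates.dt.year + (dates.dt.month >= 10).astype(int)

def split_with_holdout(df, holdout_wy, train_frac=0.75, seed=0):
    """Set aside one water year, then split the rest into train/test."""
    wy = water_year(df["Date"])
    holdout = df[wy == holdout_wy]            # reserved for final validation
    remaining = df[wy != holdout_wy]
    train = remaining.sample(frac=train_frac, random_state=seed)
    test = remaining.drop(train.index)        # the other 25%
    return train, test, holdout

# Synthetic weekly records spanning WY 2018 and WY 2019, for illustration only.
dates = pd.date_range("2017-10-01", "2019-09-30", freq="7D")
df = pd.DataFrame({"Date": dates, "SWE_cm": range(len(dates))})
train, test, holdout = split_with_holdout(df, holdout_wy=2019)
print(len(train), len(test), len(holdout))
```

Keeping the held-out water year entirely outside the random split means no week from that year can leak into training through the previous SWE feature.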

%pip install myst-nb xarray contextily rioxarray pandas==1.4.3 h5py tqdm tables scikit-learn rasterio geopandas==0.10.2 seaborn tensorflow progressbar hydroeval folium==0.12.1.post1 vincent hvplot==0.8.0 nbformat==5.7.0 matplotlib basemap numpy

import os

import pandas as pd

import warnings

import SSWEET

import Hindcast_Initialization

warnings.filterwarnings("ignore")

#Set working directories

#cwd = os.getcwd()

#os.chdir("..")

#os.chdir("..")

#datapath = os.getcwd()

cwd ='/home/jovyan/shared-public/snow-extrapolation-web/'

Load your model data#

As long as model development followed the prescribed template, the SSWEET.load_Predictions() function will correctly load and process model predictions and observations.

#Get datetime and corresponding background information to evaluate hindcast

#Need to load predictions2022-09-24.h5, 2019_predictions.h5 (if straight to here and did not make predictions)

new_year = '2019'

threshold = '20.0'

Region_list = ['N_Sierras','S_Sierras_High', 'S_Sierras_Low']

datelist = Hindcast_Initialization.Hindcast_Initialization(cwd, cwd, new_year, threshold, Region_list)

EvalDF = Hindcast_Initialization.HindCast_DataProcess(datelist,Region_list,cwd, cwd)

Parity Plot#

A parity plot is a scatterplot that compares a set of model estimates against benchmark data, i.e., the observations. Each point has coordinates (x, y), where x is a benchmark value and y is the corresponding value from the model. A parity plot is often the first visualization to investigate the skill of a model.
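For readers who want to see what such a figure involves, here is a minimal Matplotlib sketch of a parity plot. It is not the SSWEET implementation: the function name `parity_plot`, the axis labels, and the sample values are assumptions for illustration.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; omit this line in a notebook
import matplotlib.pyplot as plt

def parity_plot(observed, predicted):
    """Scatter predictions against observations with a 1:1 reference line."""
    fig, ax = plt.subplots(figsize=(5, 5))
    ax.scatter(observed, predicted, s=12, alpha=0.6)
    upper = max(max(observed), max(predicted))
    # Points on this line are perfect predictions; above it the model overestimates.
    ax.plot([0, upper], [0, upper], color="red", linestyle="--", label="1:1 line")
    ax.set_xlabel("Observed SWE (cm)")
    ax.set_ylabel("Predicted SWE (cm)")
    ax.set_aspect("equal")
    ax.legend()
    return fig, ax

# Hypothetical SWE values in cm, for illustration only.
fig, ax = parity_plot([10.0, 20.0, 30.0, 40.0], [12.0, 22.0, 33.0, 43.0])
fig.savefig("parity_sketch.png")
```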

SSWEET.parityplot(EvalDF)

More in-depth model evaluation.#

We want to evaluate the model over the course of seasonal snow accumulation and melt, at different elevation bands, and spatially.

Using SSWEET, the Model_Vs() function supports an in-depth evaluation of the multiple components that influence model skill, enabling a robust and comprehensive evaluation of a model. The Model_Vs() function takes the following inputs: Model_Vs(RegionTest, variable, Model Output, datapath), where

RegionTest is the prediction dataframe saved from the training notebook.

variable is the variable of interest. Currently supported variables include Water Year Week (WYWeek), Elevation (elevation_m), Previous SWE Estimate (prev_SWE), Latitude (Lat), Northness (northness), and Error due to Previous SWE Estimate (prev_SWE_error).

Model Output refers to one of three model outputs or processed model outputs including: Predictions (Prediction), Error (Error) which is the physical difference between each prediction and observation, and Percent Error (Percent_Error) which is Error divided by the Observation and multiplied by 100%.
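The Error and Percent_Error definitions above amount to the following arithmetic, sketched here with pandas. The column names `Observation` and `Prediction`, the sample values, and the sign convention (prediction minus observation) are assumptions for illustration and may not match the dataframe SSWEET expects.

```python
import pandas as pd

# Hypothetical SWE values in cm, for illustration only.
df = pd.DataFrame({
    "Observation": [10.0, 20.0, 40.0],
    "Prediction": [12.0, 15.0, 40.0],
})

# Error: physical difference, assumed here to be prediction minus observation.
df["Error"] = df["Prediction"] - df["Observation"]

# Percent_Error: Error divided by the observation, times 100%.
df["Percent_Error"] = 100.0 * df["Error"] / df["Observation"]

print(df)
```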

The following examples demonstrate the utility of SSWEET and the Model_Vs() function.

Error over time#

SSWEET.Model_Vs(EvalDF,'WYWeek', 'Error', cwd)

Error compared to elevation#

SSWEET.Model_Vs(EvalDF,'elevation_m', 'Error', cwd)

Percent Error compared to elevation#

*Note: percent errors with a magnitude greater than 100% are clipped to ±100%.
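A minimal sketch of that adjustment, assuming it is a simple clip to the range [-100, 100]; how SSWEET applies it internally is not shown here, and the sample values are hypothetical.

```python
import numpy as np

# Hypothetical percent errors, for illustration only.
percent_error = np.array([-250.0, -40.0, 15.0, 100.0, 320.0])

# Values beyond +/-100% are pulled back to the nearest bound.
clipped = np.clip(percent_error, -100.0, 100.0)
print(clipped)
```

Clipping keeps a few extreme values, which typically arise where the observed SWE is close to zero, from dominating the plot's axis range.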

SSWEET.Model_Vs(EvalDF,'elevation_m', 'Percent_Error', cwd)

Model error compared to northness#

SSWEET.Model_Vs(EvalDF,'northness', 'Error', cwd)

Interactive Spatial Plotting#

While figures support evaluation, spatially examining model performance has several benefits, and the Map_Plot_Eval() function supports a spatial method to evaluate model skill. The Map_Plot_Eval(datapath, RegionTest, yaxis, error_metric) function supports three different evaluation metrics: ‘KGE’, ‘cm’, or ‘%’. The physical error (cm) illustrates how close the predictions are to the observations, the mean percentage error (%) illustrates the prediction accuracy relative to the observed value, and KGE (KGE) combines the bias, variability, and correlation components of model performance; however, KGE is only useful for sites with multiple predictions.
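To make the three options concrete, the sketch below aggregates predictions per site before any mapping. It is an illustration only, not the Map_Plot_Eval() implementation: the column names `site`, `Observation`, and `Prediction`, the helper names, and the data are hypothetical. It also shows why KGE needs multiple predictions per site, since correlation and standard deviation are undefined for a single point.

```python
import numpy as np
import pandas as pd

def kge(obs, pred):
    """Kling-Gupta Efficiency, assuming the 2009 formulation."""
    r = np.corrcoef(obs, pred)[0, 1]
    alpha = pred.std() / obs.std()
    beta = pred.mean() / obs.mean()
    return 1.0 - np.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2)

def site_summary(df, metric):
    """One value per site for metric 'cm', '%', or 'KGE'."""
    values = {}
    for site, group in df.groupby("site"):
        obs = group["Observation"].to_numpy(dtype=float)
        pred = group["Prediction"].to_numpy(dtype=float)
        if metric == "cm":
            values[site] = np.mean(pred - obs)
        elif metric == "%":
            values[site] = np.mean(100.0 * (pred - obs) / obs)
        elif metric == "KGE":
            # A single prediction has no variance or correlation to score.
            values[site] = kge(obs, pred) if len(group) > 1 else np.nan
        else:
            raise ValueError(f"unsupported metric: {metric}")
    return pd.Series(values, name=metric)

# Hypothetical data: site A has three predictions, site B only one.
df = pd.DataFrame({
    "site": ["A", "A", "A", "B"],
    "Observation": [10.0, 20.0, 30.0, 15.0],
    "Prediction": [10.0, 20.0, 30.0, 18.0],
})
print(site_summary(df, "KGE"))
print(site_summary(df, "cm"))
```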

Spatially evaluating the Model via KGE#

Selecting the predictions where there was a previous SWE value demonstrates significantly increased KGE. Note, the blue icons are the SNOTEL sites used to inform predictions and are more visible now. When running interactively, clicking on the SNOTEL icon shows the SNOTEL site information and allows for an investigation of errors stemming from the proximity of the prediction locations to in situ observations.

#Folium plot

SSWEET.Map_Plot_Eval(cwd, EvalDF,'SWE (cm)', 'KGE')

Spatially evaluating the Model via error in percentage from the observed#

We can now see that the model can spatially extrapolate and that there is a significant need for improved temporal resolution data. For this case, KGE does not provide a useful method to evaluate the model. Alternatively, the Map_Plot_Eval() function supports percent error (%) and physical error (cm).

#Folium plot

SSWEET.Map_Plot_Eval(cwd, EvalDF,'SWE (cm)', '%')

Spatially evaluating the Model via error in cm#

#Folium plot

SSWEET.Map_Plot_Eval(cwd, EvalDF,'SWE (cm)', 'cm')

Other examples of using SSWEET to investigate model performance#

SSWEET.Model_Vs(EvalDF,'prev_SWE_error', 'Error', cwd)

SSWEET.Model_Vs(EvalDF,'Lat', 'Error', cwd)

Exploring the Prediction results#

While model error is a critical metric to gauge model skill, simple checks on model predictions can support the use of a model or highlight areas for improvement. For example, in montane regions, SWE generally increases with elevation up to the alpine zone (above treeline). Capturing this trend is important and a critical element of model evaluation. Once above treeline (~2,900 m in the Sierra Nevada mountains), SWE distribution is significantly affected by overall and microclimate wind speed and direction, leaving some high-altitude regions completely bare and others with large amounts of SWE. Note, many of these aspects are scale dependent (e.g., a 50 m, 250 m, 1 km, and 25 km model will vary significantly due to varying geospatial characteristics and key hydrological processes).

SSWEET.Model_Vs(EvalDF,'elevation_m', 'Prediction', cwd)

Effective modeling of elevation gradients leads to realistic modeled SWE across heterogeneous terrain. For example, the higher elevation bands display greater modeled SWE values compared to lower elevations, and the highest elevation bands display less SWE, reflecting exposed terrain subject to wind transport and snow redistribution. This indicates that the model is capable of generalizing topographical and geographical characteristics to effectively translate elevation gradients into SWE.
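A quick way to check this kind of elevation trend numerically is to bin predictions into elevation bands and compare the band means. The sketch below uses pandas with made-up values chosen only to mimic the pattern described. The band edges and the `Prediction` values are hypothetical and are not model results.

```python
import pandas as pd

# Made-up predictions (cm) at various elevations, for illustration only.
df = pd.DataFrame({
    "elevation_m": [1600, 1900, 2300, 2600, 2800, 3100, 3400],
    "Prediction": [5.0, 12.0, 30.0, 48.0, 55.0, 42.0, 35.0],
})

# Assign each grid cell to a 500 m elevation band (hypothetical band edges).
bands = pd.cut(df["elevation_m"], bins=[1500, 2000, 2500, 3000, 3500])

# Mean predicted SWE per band, and the band where it peaks.
band_mean = df.groupby(bands, observed=True)["Prediction"].mean()
peak_band = band_mean.idxmax()
print(band_mean)
print("Peak band:", peak_band)
```

If the peak band sits near treeline and the bands above it show lower means, the predictions follow the expected pattern. A monotonic increase to the highest band would suggest the model is missing wind redistribution effects.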

SSWEET.Model_Vs(EvalDF,'northness', 'Prediction', cwd)

While the model uses northness as a feature to represent the average slope and aspect of the 1-km grid, overlaying the predictions on complex topography indicates a need for higher resolution predictions. Examination of the 1-km resolution indicates it is too coarse to capture the rapid topographical changes common to montane environments, but does indicate that an increase in northness leads to an increase in SWE. Investigating the distribution of observations and predictions indicates there is a need for a larger, more comprehensive training dataset to capture key influences on SWE distribution in montane environments.

SSWEET.Model_Vs(EvalDF,'Lat', 'Prediction', cwd)